Stream processing made easy with Apache StreamPipes

Space Flyby

You don't need to be a stream processing expert to create useful custom solutions with Apache StreamPipes. We'll use StreamPipes to build a simple app that calculates when the International Space Station will fly overhead.

Our modern world is increasingly dependent on continuous data streams that generate large volumes of data in real time. These streams might come from science experiments, weather stations, business applications, or sensors on a factory shop floor. Many of the software systems that interact with these data streams follow an architecture in which events drive individual components. Continuous data sources (producers) such as sensors trigger events, and various components (consumers) process them. Producers and consumers are decoupled using a middleware layer that handles the distribution of the data, usually in the form of a message broker. This approach reduces complexity, because any number of services can receive and process incoming data streams virtually simultaneously. This flexible architecture gives rise to a new generation of tools that provide users with an easy way to create custom solutions that process data from incoming streams. One example is the open source framework Apache StreamPipes [1].
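The decoupling of producers and consumers can be illustrated with a minimal Python sketch. It is not how StreamPipes or any real message broker is implemented; the `InMemoryBroker` class, the `temperature` topic, and the threshold of 30 are hypothetical names and values chosen for illustration only.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]
Handler = Callable[[Event], None]


class InMemoryBroker:
    """Toy stand-in for a message broker: routes events by topic name."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Event) -> None:
        # The producer never references a consumer; it only names a topic.
        for handler in self._subscribers[topic]:
            handler(event)


broker = InMemoryBroker()
readings: List[float] = []
alerts: List[str] = []

# Two independent consumers receive the same stream.
broker.subscribe("temperature", lambda e: readings.append(e["value"]))
broker.subscribe(
    "temperature",
    lambda e: alerts.append(f"high: {e['value']}") if e["value"] > 30 else None,
)

# The producer (e.g., a sensor) only knows the topic.
for value in (21.5, 34.0):
    broker.publish("temperature", {"value": value})

print(readings)  # [21.5, 34.0]
print(alerts)    # ['high: 34.0']
```

Adding a third consumer requires only another `subscribe` call; the producer code does not change, which is the property that keeps this kind of architecture manageable as the number of services grows.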

StreamPipes has been an incubator project at the Apache Software Foundation since November 2019 and is part of a growing number of solutions for the Internet of Things (IoT). The StreamPipes toolbox [2] is aimed at business users with limited technical knowledge. The main goal is to make stream-processing technologies accessible to nonexperts. Various modules are available to connect IoT data streams from a variety of sources, to generate analyses of these data streams, and to examine live or historical data.

StreamPipes offers a variety of connectors and algorithms for analyzing industrial data, with the focus on integrating data from the production and automation environment. But users without access to their own production line can also benefit from StreamPipes: For example, real-time data from publicly available APIs and widely used protocols such as MQTT can be used to connect existing data sources.

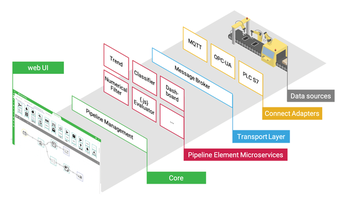

One important StreamPipes component is the Pipeline Editor. Users can rely on graphical, dataflow-oriented modeling to independently generate processing pipelines that the underlying stream processing infrastructure then automatically executes. On the application side, StreamPipes is useful for applications such as continuous monitoring (e.g., condition monitoring), detection of time-critical situations, live computation of key performance indicators, and integration of machine learning models. Figure 1 provides a rough overview of StreamPipes, from data connection, processing, and analysis through to deployment.

Stream Processing Made Easy

Figure 2 shows the different layers of the StreamPipes architecture. Most users will want to connect existing data streams in the first step. For this purpose, the StreamPipes Connect module provides a library of adapters that connect data sources via standard protocols or from specific systems that StreamPipes already supports. Connect adapters, which can also be installed on lightweight edge devices such as Raspberry Pis, collect data streams and forward them to the internal message broker (Apache Kafka is used under the hood). In the Connect adapters, users can define their own transformation rules (e.g., to convert value units).
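In StreamPipes, transformation rules are configured in the Connect user interface rather than written as code. To show what such a rule does conceptually, the following Python sketch converts the unit of one field in an event. The function `make_unit_rule`, the field names, and the unit labels are hypothetical and are not part of the StreamPipes API.

```python
from typing import Any, Callable, Dict

Event = Dict[str, Any]


def make_unit_rule(
    field: str, convert: Callable[[float], float], new_unit: str
) -> Callable[[Event], Event]:
    """Build a rule that converts one numeric field and records its new unit."""

    def rule(event: Event) -> Event:
        transformed = dict(event)  # copy, so the incoming event stays untouched
        transformed[field] = convert(float(event[field]))
        transformed[f"{field}_unit"] = new_unit
        return transformed

    return rule


# Example rule: degrees Celsius to degrees Fahrenheit.
celsius_to_fahrenheit = make_unit_rule(
    "temperature", lambda c: c * 9 / 5 + 32, "degF"
)

raw = {"timestamp": 1700000000, "temperature": 25.0, "temperature_unit": "degC"}
converted = celsius_to_fahrenheit(raw)
print(converted["temperature"], converted["temperature_unit"])  # 77.0 degF
```

Applying the rule in the adapter means every downstream consumer sees the converted values and does not need to know the unit used by the original source.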

One layer above the transport layer sit reusable algorithms (e.g., for detecting statistical trends, preprocessing data, or image processing), each of which encapsulates a specific function and is available as an event-driven microservice. StreamPipes provides data sinks, such as connectors for databases or dashboards, in the same way.

Each individual microservice provides a machine-readable description of the algorithm's requirements and functionality. For example, certain required data types or measurement units can be specified that the data stream must provide to initialize the component. The algorithm kit can be extended at runtime with a software development kit, so that the user can install additional algorithms at any time, when new requirements arise, without restarting the application.

Users interact with the web-based front end, which makes it easy to build pipelines by linking data streams with algorithms and data sinks. In contrast to other graphical tools for modeling data flows, StreamPipes integrates a matching component directly into the core application. This component continuously checks the consistency of processing pipelines while the model is being built and relies on semantic checking to prevent modeling of faulty connections.
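The idea behind the matching component can be sketched in a few lines of Python. This is a simplified illustration, not the StreamPipes implementation: the `FieldSpec` class, the `check_connection` function, and the data type and meaning labels are all hypothetical. Each stream describes its fields, each algorithm describes what it requires, and a connection is valid only if every requirement is met.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class FieldSpec:
    datatype: str  # e.g., "float"
    semantic: str  # e.g., "latitude" (hypothetical label)


def check_connection(
    stream: Dict[str, FieldSpec], required: List[FieldSpec]
) -> List[str]:
    """Return a list of problems; an empty list means the connection is valid."""
    available = set(stream.values())
    problems = []
    for req in required:
        if req not in available:
            problems.append(
                f"missing {req.datatype} field with meaning '{req.semantic}'"
            )
    return problems


iss_stream = {
    "timestamp": FieldSpec("long", "timestamp"),
    "latitude": FieldSpec("float", "latitude"),
    "longitude": FieldSpec("float", "longitude"),
}

# A geo-based algorithm needs coordinates; a trend detector needs a temperature.
geo_requirements = [FieldSpec("float", "latitude"), FieldSpec("float", "longitude")]
trend_requirements = [FieldSpec("float", "temperature")]

print(check_connection(iss_stream, geo_requirements))    # []
print(check_connection(iss_stream, trend_requirements))
# ["missing float field with meaning 'temperature'"]
```

Because the check compares meaning as well as data type, a float field that holds a latitude would not be accepted where a temperature is required, which is the kind of faulty connection the editor prevents.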

From Data to Application in a Few Clicks

For an example of a simple StreamPipes application, consider the International Space Station (ISS). Scientists rely on an open API to determine the current position of the ISS in its orbit around the Earth. The goal of the StreamPipes application will be to calculate other key figures based on incoming data and display the results on a live dashboard.

First you will need to install StreamPipes. The easiest way to set up StreamPipes is to use a Docker-based installation (Listing 1), which downloads and starts all the required components. Both Docker and Docker Compose must be present on the system, and Docker needs 2 to 3 GB of RAM allocated to it.

Listing 1

Install and Launch StreamPipes

    # download and unzip latest release from streampipes.apache.org/download.html
    $ cd incubator-streampipes-installer/compose
    $ docker-compose up -d

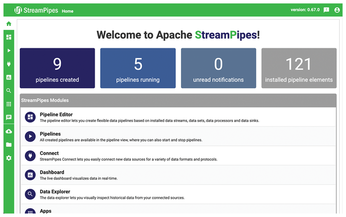

During the initial installation, the Docker images for StreamPipes and other images used in the background (for example, Apache Kafka) are loaded. Once the system is started, you can complete the setup in a web browser. By default, the interface is accessible on port 80. After you log in with your choice of user credentials (they are only saved locally), the StreamPipes welcome page appears (Figure 3).

Simple IoT Data Connection with Connect

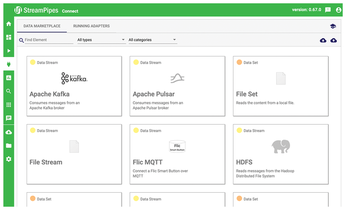

The first step is for the application to receive the position data of the ISS as a continuous data stream. For this purpose, you need to change to the Connect module. The data marketplace, which is now visible, shows you the existing adapters, each of which can be configured individually (Figure 4). For example, you will find generic adapters for MQTT, PLC controls, Kafka, or databases, as well as some specific adapters for source systems such as Slack. For this ISS application, use the preconfigured ISS Location adapter.

Each adapter has a wizard to configure the required parameters. In this case, the matching adapter generates an event with only three parameters: a timestamp and the coordinates of the current ISS location (latitude and longitude in WGS84 format).
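To make the shape of such an event concrete, the following Python sketch flattens a position response into the three fields named above. The article does not state which API the adapter queries; the payload layout used here follows the public Open Notify `iss-now` endpoint, which is an assumption, and the sample values are invented. The function name `to_event` is hypothetical.

```python
import json
from typing import Dict, Union


def to_event(payload: str) -> Dict[str, Union[int, float]]:
    """Flatten a position response into timestamp, latitude, and longitude."""
    data = json.loads(payload)
    position = data["iss_position"]
    return {
        "timestamp": int(data["timestamp"]),
        # The assumed API delivers coordinates as strings, hence float().
        "latitude": float(position["latitude"]),
        "longitude": float(position["longitude"]),
    }


# Invented sample in the assumed response layout (no network access needed).
sample = (
    '{"message": "success", "timestamp": 1600000000, '
    '"iss_position": {"latitude": "12.5", "longitude": "-45.25"}}'
)

event = to_event(sample)
print(event)
# {'timestamp': 1600000000, 'latitude': 12.5, 'longitude': -45.25}
```

A flat event with typed numeric fields is easier for downstream algorithms to consume than the nested response, which is why the sketch converts the coordinate strings to numbers.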

At the end of the wizard, assign a name to the new adapter (here ISS-Location) and start the process. From now on, regular updates of the ISS position will reach the underlying Apache Kafka infrastructure. A quick look at the pipeline editor shows a new icon in the Data Streams tab.
