Of lakes and sparks – How Hadoop 2 got it right

Misconceptions

Hadoop version 2 has transitioned from an application to a Big Data platform. Reports of its demise are premature at best.

In a recent story on the PCWorld website titled "Hadoop successor sparks a data analysis evolution," the author predicts that Apache Spark will supplant Hadoop in 2015 for Big Data processing [1]. The article is so full of mis- (or dis-)information that it really is a disservice to the industry. To provide an accurate picture of Spark and Hadoop, several topics need to be explored in detail.

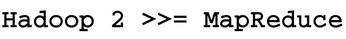

First, as with any article on "Big Data," it is important to define exactly what you are talking about. The term "Big Data" is a marketing buzz-phrase that has about as much meaning as "Tall Mountain" or "Fast Car." Second, the concept of the data lake (less of a buzz-phrase and more descriptive than Big Data) needs to be defined. Third, Hadoop version 2 is more than a MapReduce engine. Indeed, if there is one thing to take away from this article, it is the message in Figure 1. Finally, this article explains how Apache Spark fits neatly into the Hadoop ecosystem.

[...]
