Bonding your network adapters for better performance

Together

© Photo by Andrew Moca on Unsplash

Combining your network adapters can speed up network performance – but a little more testing could lead to better choices.

I recently bought a used HP Z840 workstation to use as a server for a Proxmox [1] virtualization environment. The first virtual machine (VM) I added was an Ubuntu Server 22.04 LTS instance with nothing on it but the Cockpit [2] management tool and the WireGuard [3] VPN solution. I planned to use WireGuard to connect to my home network from anywhere, so that I can back up and retrieve files as needed and manage the other devices in my home lab. WireGuard also gives me the ability to use those sketchy WiFi networks that you find at cafes and in malls with less worry about someone snooping on my traffic.

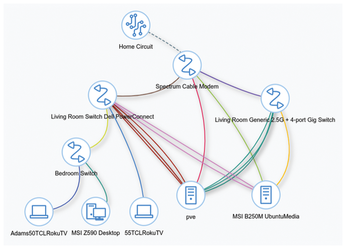

The Z840 has a total of seven network interface cards (NICs) installed: two on the motherboard and five more on two separate add-in cards. My second server with a backup WireGuard instance has 4 gigabit NICs in total. Figure 1 is a screenshot from NetBox that shows how everything is connected to my two switches and the ISP-supplied router for as much redundancy as I can get from a single home network connection.

The Problem

On my B250m-based server, I had previously used one connection directly to the ISP's router and the other three to the single no-name switch, which is connected to the ISP router from one of its ports. All four of these connections are bonded with the balance-alb mode, as you can see in the netplan config file (Listing 1).

Listing 1

Netplan Configuration File

    network:
      version: 2
      renderer: networkd
      ethernets:
        enp6s0:
          dhcp4: false
          dhcp6: false
        enp7s0:
          dhcp4: false
          dhcp6: false
        enp2s4f0:
          dhcp4: false
          dhcp6: false
        enp2s4f1:
          dhcp4: false
          dhcp6: false
      bonds:
        bond0:
          dhcp4: false
          dhcp6: false
          interfaces:
            - enp6s0
            - enp7s0
            - enp2s4f0
            - enp2s4f1
          addresses: [192.168.0.20/24]
          routes:
            - to: default
              via: 192.168.0.1
          nameservers:
            addresses: [8.8.8.8, 1.1.1.1, 8.8.4.4]
          parameters:
            mode: balance-alb
            mii-monitor-interval: 100

For those who are not familiar with the term, bonding (or teaming) combines multiple NICs into a single logical connection. The config file in Listing 1 is all that is needed to create a bond in Ubuntu. Since version 18.04 (released in 2018), Canonical has included netplan as the standard utility for configuring networks. Netplan is included in both server and desktop versions, and the nice thing about it is that it only requires editing a single YAML file for your entire configuration. Netplan was designed to be human-readable and easy to use, so (as shown in Listing 1) the configuration makes sense when you look at it, and it can be modified and applied directly on a running system.

To change your network configuration, go to /etc/netplan, where you will find the YAML config files for your system. If you are running a typical Ubuntu Server 22.04 install, the file will likely be named 00-installer-config.yaml. To change your config, you just need to edit this file using nano (Ubuntu Server) or gnome-text-editor (Ubuntu Desktop), save it, and run sudo netplan apply to apply the changes. If there are errors in your config, netplan will notify you when you run the apply command. Note that you will need to use spaces in this file (not tabs), and you will need to be consistent with the indentation.
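Because a tab in the indentation will make netplan reject the file, a quick pre-flight check can save a round trip. The following is a minimal sketch rather than anything from the setup described here: the function name find_tab_indents is made up for illustration, and the path in the usage comment assumes the default installer file name mentioned above.

```shell
#!/bin/sh
# Sketch: look for tab characters in the indentation of a netplan YAML file.
# find_tab_indents is a hypothetical helper name, not a netplan command.

# Print every line whose leading whitespace contains a tab, with its line
# number. Follows grep's convention: returns 0 if such lines were found,
# 1 if the file is clean.
find_tab_indents() {
    tab=$(printf '\t')
    grep -n "^[ ${tab}]*${tab}" "$1"
}

# Example (left as a comment because it changes the live network):
#   find_tab_indents /etc/netplan/00-installer-config.yaml \
#       || sudo netplan try
```

In the commented example, netplan try is only reached when no tab-indented lines are found. Unlike netplan apply, netplan try rolls the change back automatically unless you confirm it, which is useful when you are editing the config over SSH. It is worth checking the netplan documentation for how well that rollback covers bond parameter changes.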

In Listing 1, you can see that I have four NICs and all of them are set to false for DHCP4 and DHCP6. This ensures that the bond gets the IP address, not an individual NIC. Under the bonds section, I have made one interface called bond0 using all four NICs. I used a static IP address, and so I kept DHCP set to false for the bond also. Since I configured a static IP address, I also need to define the default gateway under the routes section. I always define DNS servers under the nameservers section as a personal preference, though that part wouldn't be required for this config. The last section is where you define what type of bonding you would like to use. I always choose balance-alb (adaptive load balancing for transmit and receive) because, in my experience, it fits the homelab use case very well. See the box entitled "Bonding" for a summary of the available bonding options.

Bonding

Bonding options available for Linux systems include:

balance-rr – a round-robin policy that sends packets in order from one NIC to the next. This does give failover protection, but in my opinion, it isn't as good for mixed-speed bonds as some of the other options because there is no "thought" put into which NIC is sending packets. It's simply round robin, one to the next to the next ad infinitum.

active-backup – simple redundancy without load balancing. You can think of this as having a hot spare. One NIC waits until the other fails and then takes over. This can add consistency if you have a flaky NIC or NIC drivers, but otherwise one NIC simply does nothing most of the time. This would be a good option, though, if you have a 10G primary NIC to use all of the time and a 1G NIC for backup in case it fails.

balance-xor – uses a hashing algorithm to give load balancing and failover protection using an additional transmit policy that can be tailored for your application. This option offers advantages but is one of the more difficult policies to optimize.

broadcast – sends everything from everywhere. While that may sound effective, it adds a lot of noise and overhead to your network and is generally not recommended. This is the brute force, shotgun approach. It offers redundancy but for most applications is wasteful of energy without necessarily offering a higher level of consistency.

802.3ad – uses a protocol (LACP) for teaming, which must be supported by the managed switch you are connecting to. That requirement is its main pitfall. With 802.3ad, you would create link aggregation groups (LAGs). This is considered the "right" way to do it by folks who can always afford to do things the "right" way with managed switches. 802.3ad is the IEEE standard that covers teaming and is fantastic if all of your gear supports it.

balance-tlb – adaptive transmit load balancing; sends packets based on NIC availability but does not require a managed switch. This option offers failover and is similar to balance-alb with one key difference: Incoming packets are simply sent to whatever NIC was last used so long as it is still up. In other words, this load balances on transmit but NOT on receive.

balance-alb – the same as balance-tlb but also balances the load of incoming packets. This gives the user failover as well as transmit and receive load balancing without requiring a managed switch. For me, this is the best option. I have not tested to see if there is a noticeable difference between balance-alb and balance-tlb, but I suspect that for a home server and homelab use there won't be. I would recommend testing the difference between alb and tlb if using this in a production environment, as the extra work being done on the receive side may have unintended side effects in terms of latency or utilization.

The best schema for bonding in your case might not be the best for me. With that in mind, I would recommend researching your particular use case to see what others have done. For most homelab use where utilization isn't constantly maxed out, I believe you will typically find that balance-alb is the best option.
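Whichever mode you pick, it helps to confirm what the kernel actually applied. The Linux bonding driver exposes a status file for each bond under /proc/net/bonding, where balance-alb shows up as "adaptive load balancing". The sketch below condenses that file into one line per member NIC. The function name bond_summary is hypothetical, and the default path assumes the bond is called bond0 as in Listing 1.

```shell
#!/bin/sh
# Sketch: summarize the bonding driver's status file.
# bond_summary is an illustrative name; the default path assumes bond0.
bond_summary() {
    awk -F': ' '
        # Overall mode as reported by the driver
        /^Bonding Mode:/    { print "mode: " $2 }
        # Remember which member NIC the following lines belong to
        /^Slave Interface:/ { iface = $2 }
        # Print the link state of that member; the bond-wide MII Status
        # line appears before any member and is skipped (iface is empty)
        /^MII Status:/ && iface != "" { print iface ": " $2; iface = "" }
    ' "${1:-/proc/net/bonding/bond0}"
}

# Example:
#   bond_summary                            # uses /proc/net/bonding/bond0
#   bond_summary /proc/net/bonding/bond1    # a differently named bond
```

A member that reports down here is a hint to check the cable, switch port, or driver before blaming the bonding mode.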

Findings

What I discovered was that setting up Proxmox with a dedicated port for WireGuard and the remaining ports bonded for all other VMs actually resulted in slower and less consistent speeds for WireGuard than what I had been getting on my previous B250m-based machine with bonded NICs. This is something which I didn't expect, but in retrospect, perhaps I should have.

The initial plan for my new gear was to use one NIC for management only, one for WireGuard only, and the remaining 5 NICs for all of my other VMs on the Proxmox server. My expectation was that having a dedicated NIC used only for the WireGuard VPN would help me to realize faster speeds but also more consistent speeds because the VPN would be independent of my other VMs' network performance. Although that would mean no redundancy for WireGuard on that individual machine, I didn't care, because I now had two servers running. If my new server went down, I could simply connect to the old one.

After experimenting with the configuration, I eventually discovered it was better not to put the VPN on a separate NIC. Instead, I use a single port for management only and team the other six NICs in my Proxmox server. That arrangement gave the best speed and consistency for WireGuard, even though all of my other VMs are using that same bond. Figure 2 shows the configuration. You will see 10 NICs in Figure 2, but three of them are not running. This is an oddity of some quad-port cards in Proxmox. I run the following command on an hourly basis to reload the network interface configuration:

ifreload -a

This command ensures I get all six up and running, albeit with a "failure" each time I ifreload. (Note that it isn't really a failure, since those NICs don't exist. You might encounter this problem if you decide to use Proxmox with a bonded quad-port card.)
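The article does not say how the hourly run is scheduled, so the following is only one possible sketch, assuming a cron.d entry that runs as root. The function name hourly_ifreload_entry and the file name in the usage comment are made up for illustration. The full path to ifreload is resolved with command -v because cron jobs typically run with a short PATH.

```shell
#!/bin/sh
# Sketch: build a cron.d line that runs "ifreload -a" at minute 0 of every hour.
# hourly_ifreload_entry is a hypothetical helper; pass a path to override
# the location found by "command -v".
hourly_ifreload_entry() {
    bin=${1:-$(command -v ifreload)}
    # cron.d format: minute hour day-of-month month day-of-week user command
    printf '0 * * * * root %s -a\n' "$bin"
}

# Example (assumed file name; run on the Proxmox host):
#   hourly_ifreload_entry | sudo tee /etc/cron.d/ifreload-hourly
```

A systemd timer would work just as well; cron is used here only because it fits in one line.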

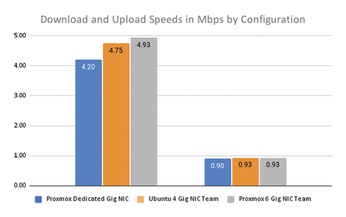

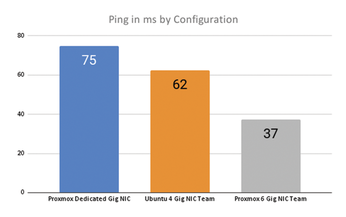

Results

Figures 3 and 4 show network speeds and ping times. You can see that by bonding the single NIC that was previously dedicated to WireGuard into a team with the other five NICs, I was able to achieve better ping times and also better speeds. More importantly, the WireGuard speeds were very consistent. Across five runs, I saw a maximum variation of only 0.05 Mbps with the six bonded NICs in Proxmox, versus up to 0.45 Mbps when using the dedicated NIC. With my previous four-NIC B250M setup, the consistency was in the middle at about 0.34 Mbps of variation, but the speeds were about 0.2 Mbps slower on average.
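If you want to repeat this kind of comparison on your own hardware, the spread across runs is easy to compute. The sketch below assumes that "variation" means the highest result minus the lowest, which the text above does not state explicitly. The function name speed_spread is illustrative, and the arguments are whatever speeds (in Mbps) your own test runs report.

```shell
#!/bin/sh
# Sketch: print the spread (max minus min) of a series of speed results.
# speed_spread is a hypothetical helper; "variation" is assumed to mean
# the difference between the fastest and slowest run.
speed_spread() {
    printf '%s\n' "$@" | awk '
        { v = $1 + 0 }                      # force numeric interpretation
        NR == 1 { min = v; max = v }
        { if (v < min) min = v; if (v > max) max = v }
        END { printf "%.2f\n", max - min }'
}

# Example: pass one value per run, in Mbps
#   speed_spread RUN1 RUN2 RUN3 RUN4 RUN5
```

Running it once per configuration (dedicated NIC, bonded NICs) gives numbers you can compare directly.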
